Instant 3D Photography

|

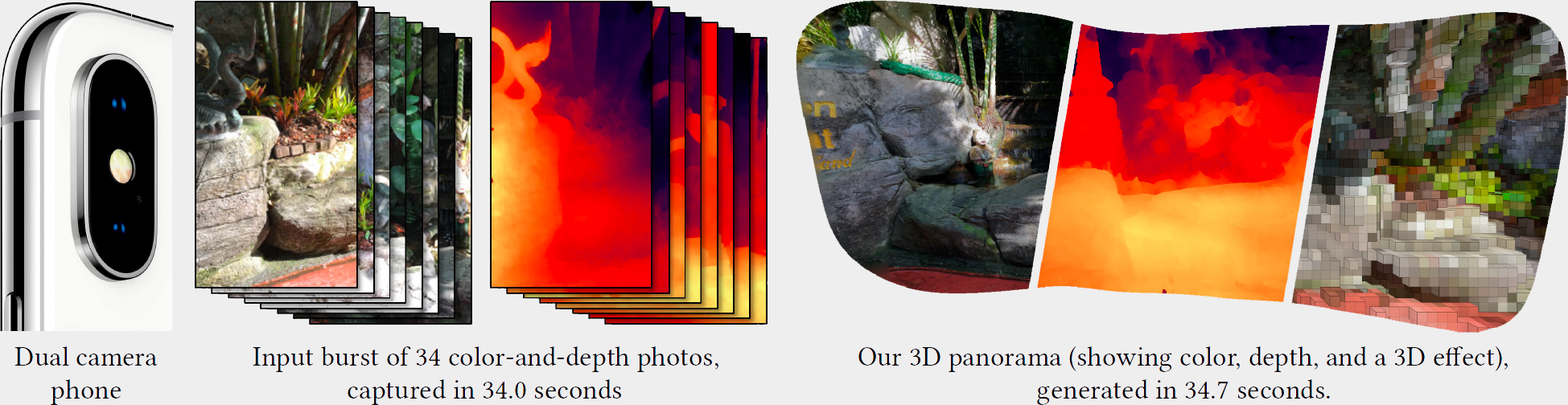

| Our work enables practical and casual 3D capture with regular dual camera cell phones. Left: A burst of input color-and-depth image pairs that we captured with a dual camera cell phone at a rate of one image per second. Right: 3D panorama generated with our algorithm in about the same time it took to capture. The geometry is highly detailed and enables viewing with binocular and motion parallax in VR, as well as applying 3D effects that interact with the scene, e.g., through occlusions (right). |

Abstract

We present an algorithm for constructing 3D panoramas from a sequence of aligned color-and-depth image pairs. Such sequences can be conveniently captured using dual lens cell phone cameras that reconstruct depth maps from synchronized stereo image capture. Due to the small baseline and resulting triangulation error, the depth maps are considerably degraded and contain low-frequency error, which prevents alignment using simple global transformations. We propose a novel optimization that jointly estimates the camera poses as well as spatially-varying adjustment maps that are applied to deform the depth maps and bring them into good alignment. When fusing the aligned images into a seamless mosaic we utilize a carefully designed data term and the high quality of our depth alignment to achieve two orders of magnitude speedup w.r.t. previous solutions that rely on discrete optimization by removing the need for label smoothness optimization. Our algorithm processes about one input image per second, resulting in an end-to-end runtime of about one minute for mid-sized panoramas. The final 3D panoramas are highly detailed and can be viewed with binocular and head motion parallax in VR.
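To illustrate why a small stereo baseline degrades depth, the sketch below uses the standard pinhole stereo relation z = f·b/d and its first-order error propagation. It is not taken from the paper: the function names, focal length, baselines, and disparity error are hypothetical values chosen only for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole stereo triangulation: z = f * b / d."""
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, depth_m, disparity_error_px):
    """First-order depth uncertainty: dz ~= z^2 / (f * b) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical values for illustration only (not from the paper).
FOCAL_PX = 1500.0          # assumed focal length in pixels
DISPARITY_ERROR_PX = 0.25  # assumed stereo matching error in pixels

if __name__ == "__main__":
    for baseline_m in (0.01, 0.10):   # phone-like vs. wider stereo rig
        for depth_m in (1.0, 4.0):
            err = depth_error(FOCAL_PX, baseline_m, depth_m, DISPARITY_ERROR_PX)
            print(f"baseline={baseline_m:.2f} m  depth={depth_m:.1f} m  "
                  f"depth error ~ {err:.3f} m")
```

Under this model the depth error grows quadratically with distance and inversely with the baseline, which is consistent with the abstract's observation that depth maps from small-baseline dual cameras cannot be aligned with simple global transformations.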

BibTeX

@article{Instant3D2018,
  author    = {Peter Hedman and Johannes Kopf},
  title     = {{Instant 3D Photography}},
  journal   = {ACM Transactions on Graphics (Proc. SIGGRAPH)},
  publisher = {ACM},
  volume    = {37},
  number    = {4},
  pages     = {101:1--101:12},
  year      = {2018}
}

Acknowledgements

The authors would like to thank Clément Godard for generating the depth maps for the CNN evaluation and Suhib Alsisan for developing the capture app.

Paper PDF (22.3 MB)

Supplemental material

Implementation details (6 MB)
