Casual 3D Photography

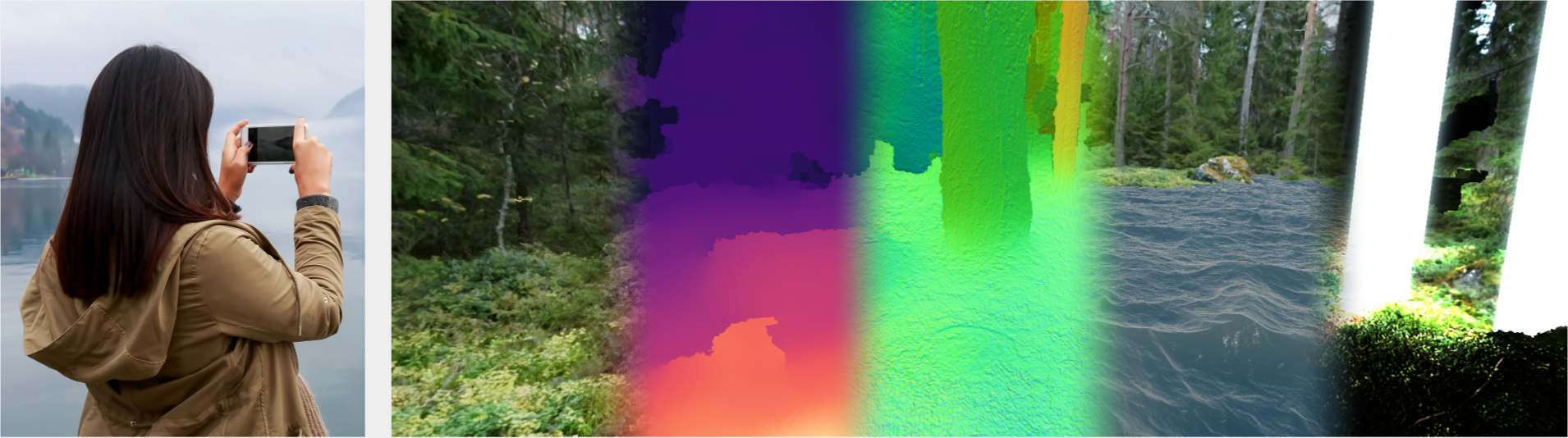

Our algorithm reconstructs a 3D photo, i.e., a multi-layered panoramic mesh with reconstructed surface color, depth, and normals, from casually captured cell phone or DSLR images. It can be viewed with full binocular and motion parallax in VR as well as on a regular mobile device or in a Web browser. The reconstructed depth and normals allow interacting with the scene through geometry-aware and lighting effects.

Abstract

We present an algorithm that enables casual 3D photography. Given a set of input photos captured with a hand-held cell phone or DSLR camera, our algorithm reconstructs a 3D photo: a central panoramic, textured, normal-mapped, multi-layered geometric mesh representation. 3D photos can be stored compactly and are optimized for rendering from viewpoints near the capture viewpoints. They can be rendered using a standard rasterization pipeline to produce perspective views with motion parallax. When viewed in VR, 3D photos provide geometrically consistent views for both eyes. Our geometric representation also allows interacting with the scene using 3D geometry-aware effects, such as adding new objects to the scene and applying artistic lighting effects.

Our 3D photo reconstruction algorithm starts with a standard structure-from-motion and multi-view stereo reconstruction of the scene. The dense stereo reconstruction is made robust to imperfect capture conditions using a novel near-envelope cost volume prior that discards erroneous near-depth hypotheses. We propose a novel parallax-tolerant stitching algorithm that warps the depth maps into the central panorama and stitches two color-and-depth panoramas for the front and back scene surfaces. The two panoramas are fused into a single non-redundant, well-connected geometric mesh. We provide videos demonstrating users interactively viewing and manipulating our 3D photos.
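To make the near-envelope idea concrete, the following is a minimal sketch and not the paper's implementation. It assumes a stereo cost volume stored as nested lists indexed `[depth][y][x]` and a per-pixel near envelope (a conservative lower bound on depth) that is already available; how that envelope is computed is not covered here. The function names `apply_near_envelope` and `winner_take_all`, the data layout, and the toy numbers are all hypothetical.

```python
# Hypothetical sketch: discard depth hypotheses that lie in front of a
# per-pixel near envelope, then pick the cheapest remaining hypothesis.
# Data layout and names are assumptions made for illustration only.

DISCARDED = float("inf")  # cost assigned to hypotheses ruled out by the prior


def apply_near_envelope(cost_volume, depths, near_envelope):
    """Return a copy of cost_volume with too-near hypotheses discarded.

    cost_volume[d][y][x] is the matching cost of hypothesis depths[d]
    at pixel (x, y); near_envelope[y][x] is a lower bound on depth there.
    """
    return [
        [
            [
                DISCARDED if depth < near_envelope[y][x] else cost
                for x, cost in enumerate(row)
            ]
            for y, row in enumerate(plane)
        ]
        for depth, plane in zip(depths, cost_volume)
    ]


def winner_take_all(cost_volume, depths):
    """Pick, per pixel, the depth hypothesis with the lowest cost.

    If every hypothesis at a pixel was discarded, the first depth is
    returned; a real system would flag such pixels as invalid instead.
    """
    height, width = len(cost_volume[0]), len(cost_volume[0][0])
    return [
        [
            depths[min(range(len(depths)), key=lambda d: cost_volume[d][y][x])]
            for x in range(width)
        ]
        for y in range(height)
    ]


# Toy 1x2 image with three depth hypotheses.
depths = [1.0, 2.0, 4.0]
cost_volume = [
    [[0.1, 0.5]],  # costs at depth 1.0
    [[0.3, 0.2]],  # costs at depth 2.0
    [[0.4, 0.9]],  # costs at depth 4.0
]
near_envelope = [[1.5, 0.5]]  # left pixel cannot be nearer than 1.5

raw_depth = winner_take_all(cost_volume, depths)
filtered = apply_near_envelope(cost_volume, depths, near_envelope)
robust_depth = winner_take_all(filtered, depths)
```

In this toy case the left pixel's lowest raw cost is a spurious near hypothesis at depth 1.0; once the envelope rules it out, the pixel falls back to depth 2.0, while the right pixel is unaffected.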

BibTeX

@article{Casual3D2017,
  author = {Peter Hedman and Suhib Alsisan and Richard Szeliski and Johannes Kopf},
  title = {{Casual 3D Photography}},
  journal = {ACM Transactions on Graphics (Proc. SIGGRAPH Asia)},
  publisher = {ACM},
  volume = {36},
  number = {6},
  pages = {234:1--234:15},
  year = {2017}
}

Acknowledgements

The authors would like to thank Scott Wehrwein, Tianfan Xue, Jan-Michael Frahm and Kevin Matzen for helpful comments and discussion.

Paper PDF (6.6 MB)

Slides (1 GB)

Supplemental material
