RecurBot: Learn to Auto-complete GUI Tasks From Human Demonstrations

Thanapong Intharah, Michael Firman and Gabriel J. Brostow

University College London

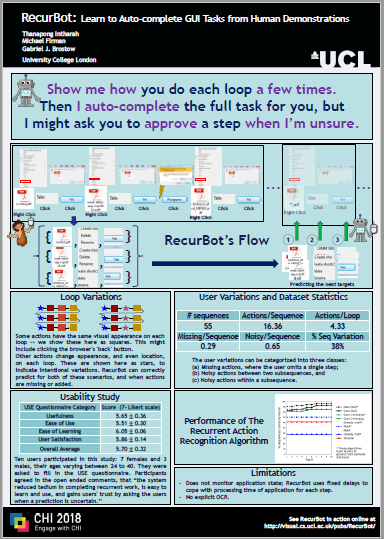

On the surface, task completion should be easy in graphical user interface (GUI) settings. In practice, however, different actions look alike, and applications run in operating-system silos. Within GUI action recognition and prediction, our aim is to help the user, at least in completing tedious tasks that are largely repetitive. We propose a method that learns from a few user-performed demonstrations, then predicts and finally performs the remaining actions in the task. For example, a user can send customized SMS messages to the first three contacts in a school's spreadsheet of parents; our system then loops the process, iterating through the remaining parents.

First, our analysis system segments the demonstration into discrete loops, where each iteration usually includes both intentional and accidental variations. Our technical innovation is a solution to the long-standing motif-finding optimization problem, but we also find visual patterns in those intentional variations. The second challenge is to predict subsequent GUI actions, extrapolating these patterns so that our system can predict and perform the rest of a task. We validate our approach on a new database of GUI tasks and show that our system usually (a) gleans what it needs from short user demonstrations and (b) auto-completes tasks in diverse GUI situations.
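The two steps described above, segmenting a demonstration into loop iterations and extrapolating the intentional variations, can be illustrated with a toy sketch. This is not RecurBot's implementation: the system described here works from visual patterns and solves a motif-finding optimization, whereas this sketch assumes the demonstration has already been abstracted into symbolic actions. The `Action` type, its `kind`/`target`/`index` fields, and the functions `find_period` and `predict_next_iteration` are hypothetical. Period detection is a simple self-comparison with a mismatch tolerance (a stand-in for motif finding), and extrapolation assumes each varying parameter follows an arithmetic progression, such as a spreadsheet row number that increases by one per iteration.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Action:
    """Hypothetical symbolic GUI action (not RecurBot's representation)."""
    kind: str        # e.g. "click", "copy", "type"
    target: str      # abstract UI element label
    index: int = 0   # parameter that may vary per iteration, e.g. a row number


def find_period(signature: Sequence[Tuple[str, str]],
                max_mismatch_ratio: float = 0.0,
                min_repeats: int = 2) -> Optional[int]:
    """Return the smallest period k at which the signature repeats, allowing a
    fraction of mismatches (a stand-in for motif finding, not the paper's solver)."""
    n = len(signature)
    for k in range(1, n // min_repeats + 1):
        comparisons = n - k
        mismatches = sum(1 for i in range(k, n) if signature[i] != signature[i - k])
        if mismatches <= max_mismatch_ratio * comparisons:
            return k
    return None


def predict_next_iteration(actions: List[Action], k: int) -> List[Action]:
    """Extrapolate one more loop iteration, assuming each per-position index
    follows an arithmetic progression across the complete iterations seen."""
    iterations = [actions[s:s + k] for s in range(0, len(actions) - k + 1, k)]
    last = iterations[-1]
    predicted = []
    for j in range(k):
        values = [it[j].index for it in iterations]
        steps = {b - a for a, b in zip(values, values[1:])}
        # Constant step: continue the progression; otherwise repeat the last value.
        step = steps.pop() if len(steps) == 1 else 0
        predicted.append(Action(last[j].kind, last[j].target, last[j].index + step))
    return predicted


# Toy demonstration: three iterations of "select parent row, copy number, type SMS".
demo: List[Action] = []
for row in (1, 2, 3):
    demo += [Action("click", "spreadsheet_row", row),
             Action("copy", "phone_cell", row),
             Action("type", "sms_body")]

k = find_period([(a.kind, a.target) for a in demo])
nxt = predict_next_iteration(demo, k)
print(k)       # 3
print(nxt[0])  # Action(kind='click', target='spreadsheet_row', index=4)
```

With a nonzero `max_mismatch_ratio`, a single accidental action substituted into one iteration can still leave the correct period detectable, which loosely mirrors the tolerance to accidental variations described above; insertions or deletions would shift the alignment and call for a proper motif-finding method.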

BibTeX

@inproceedings{IntharahRecurBot2018,
  author = {Intharah, Thanapong and Firman, Michael and Brostow, Gabriel J.},
  title = {RecurBot: Learn to Auto-complete GUI Tasks From Human Demonstrations},
  booktitle = {Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems},
  series = {CHI EA '18},
  year = {2018},
  location = {Montreal, Canada},
  publisher = {ACM},
}

Acknowledgements

The authors were supported by the Royal Thai Government Scholarship, and NERC NE/P019013/1.