Harmonic Networks: Deep Translation and Rotation Equivariance

University College London

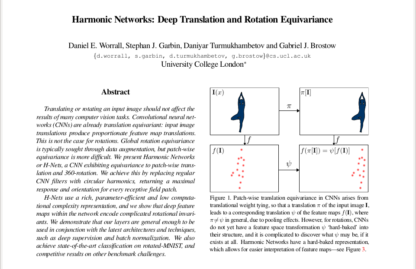

Figure: CNN - HNet Comparison (panels: Regular CNN, HNet)

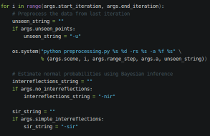

Abstract

Translating or rotating an input image should not affect the results of many computer vision tasks. Convolutional neural networks (CNNs) are already translation equivariant: input image translations produce proportionate feature map translations. This is not the case for rotations. Global rotation equivariance is typically sought through data augmentation, but patch-wise equivariance is more difficult. We present Harmonic Networks or H-Nets, a CNN exhibiting equivariance to patch-wise translation and 360°-rotation. We achieve this by replacing regular CNN filters with circular harmonics, returning a maximal response and orientation for every receptive field patch. H-Nets use a rich, parameter-efficient representation with low computational complexity, and we show that deep feature maps within the network encode complicated rotational invariants. We demonstrate that our layers are general enough to be used in conjunction with the latest architectures and techniques, such as deep supervision and batch normalization. We also achieve state-of-the-art classification on rotated-MNIST, and competitive results on other benchmark challenges.
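To make the circular-harmonic idea concrete, here is a minimal sketch (not the authors' implementation) of a single complex filter of the form R(r)·exp(i·m·φ) applied to one patch and to the same patch rotated by 90°. The names `circular_harmonic`, `rot90`, and `response`, the 9×9 patch size, and the fixed radial envelope are assumptions made for illustration; in H-Nets the radial profile and a phase offset are learned. The sketch suggests that the response magnitude is unchanged by the rotation while the phase shifts by m times the rotation angle, which is the sense in which a filter returns a response and an orientation.

```python
import cmath
import math
import random


def circular_harmonic(size, m, sigma=2.0):
    """Sample W(r, phi) = R(r) * exp(i*m*phi) on a size x size grid (size odd)."""
    k = size // 2
    filt = []
    for y in range(-k, k + 1):
        row = []
        for x in range(-k, k + 1):
            r = math.hypot(x, y)
            phi = math.atan2(y, x)
            # Assumed radial envelope for illustration; it vanishes at the
            # centre, where the angle phi is undefined.
            radial = r * math.exp(-r * r / (2 * sigma * sigma))
            row.append(radial * cmath.exp(1j * m * phi))
        filt.append(row)
    return filt


def rot90(patch):
    """Rotate a square patch by a quarter turn (exact, no interpolation)."""
    n = len(patch)
    return [[patch[j][n - 1 - i] for j in range(n)] for i in range(n)]


def response(patch, filt):
    """Cross-correlation of filter and patch, evaluated at the patch centre."""
    return sum(w * p
               for wrow, prow in zip(filt, patch)
               for w, p in zip(wrow, prow))


random.seed(0)
patch = [[random.gauss(0, 1) for _ in range(9)] for _ in range(9)]
rotated = rot90(patch)

results = {}
for m in (0, 1, 2):  # m is the rotation order of the filter
    w = circular_harmonic(9, m)
    a, b = response(patch, w), response(rotated, w)
    results[m] = (a, b)
    # Magnitudes agree; the phase of b / a is a multiple of the rotation angle.
    print(m, abs(a), abs(b), cmath.phase(b / a))
```

With this grid convention (row index as y, column index as x), the quarter turn corresponds to an angle of -90°, so the rotated response is the original multiplied by exp(-i·m·π/2); the sign depends on the coordinate convention chosen. The m = 0 filter is rotation invariant, while higher orders carry orientation information in their phase.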

@ARTICLE{worrall_hnets_2016,
  author = {{Worrall}, D.~E. and {Garbin}, S.~J. and {Turmukhambetov}, D. and {Brostow}, G.~J.},
  title = "{Harmonic Networks: Deep Translation and Rotation Equivariance}",
  journal = {ArXiv e-prints},
  archivePrefix = "arXiv",
  eprint = {1612.04642},
  primaryClass = "cs.CV",
  keywords = {Computer Science - Computer Vision and Pattern Recognition, Computer Science - Learning, Statistics - Machine Learning},
  year = 2016,
  month = dec,
  adsurl = {http://adsabs.harvard.edu/abs/2016arXiv161204642W},
  adsnote = {Provided by the SAO/NASA Astrophysics Data System}
}

Paper - [4.3 MB]


Github Code Repository
