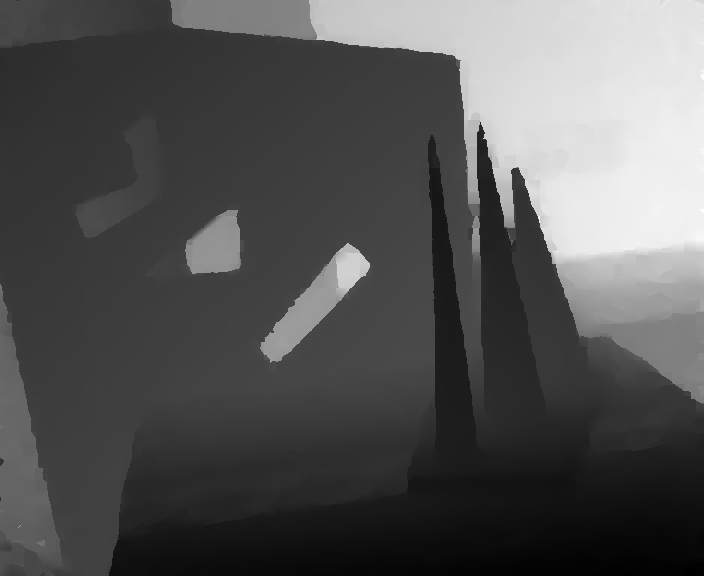

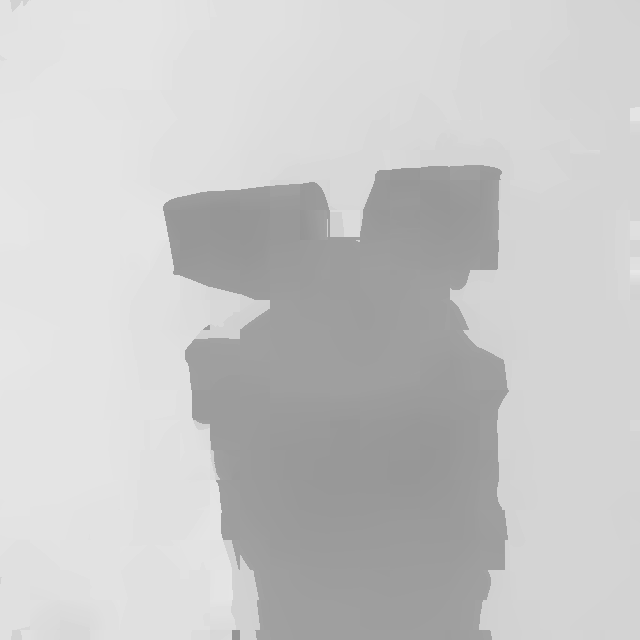

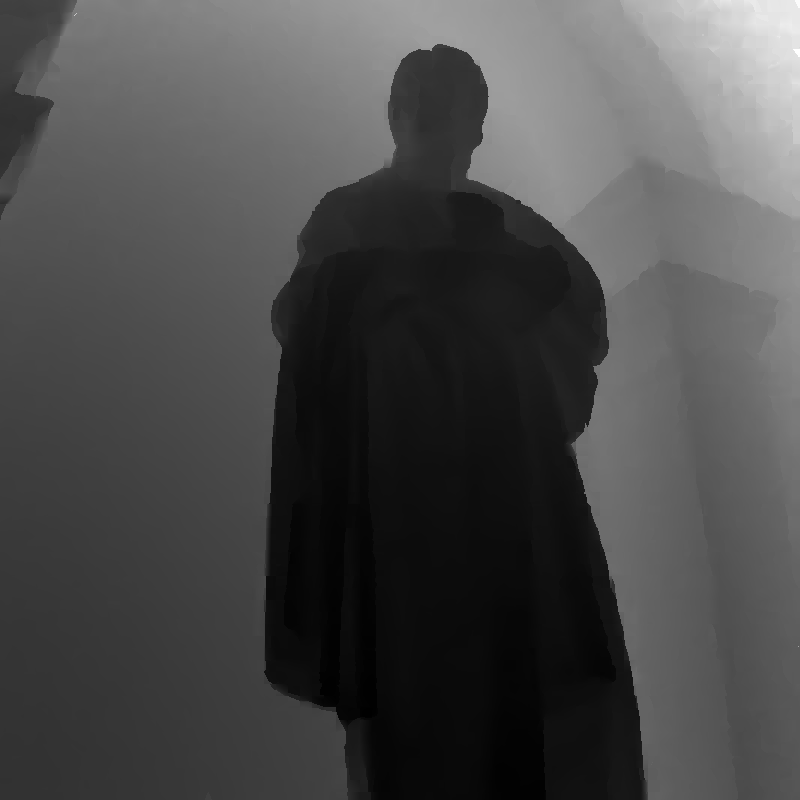

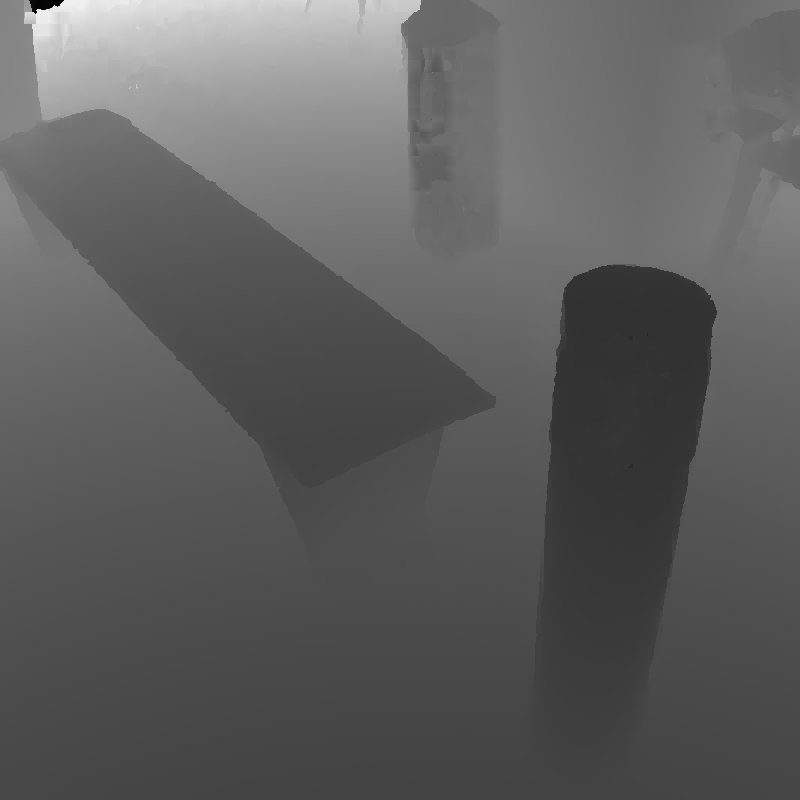

The methods available for comparison, each with its own color code, are:

- Our Result
- Freeman and Liu
- Nearest Neighbour
- Bicubic
- SCSR
- Q. Yang et al.
- Bilateral and NN
- Input 3D
- Our Result - Brown: uses the Brown Range Database instead of synthetic data
- Upsampling First: patching is performed at high resolution, as in image approaches
- Ground Truth

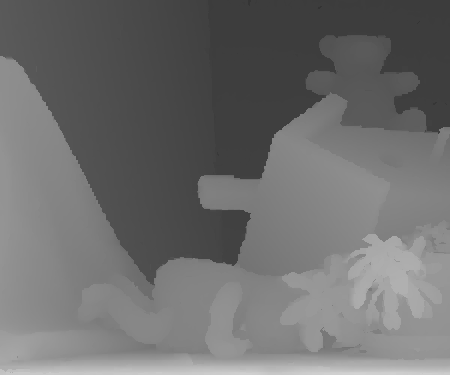

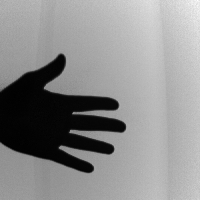

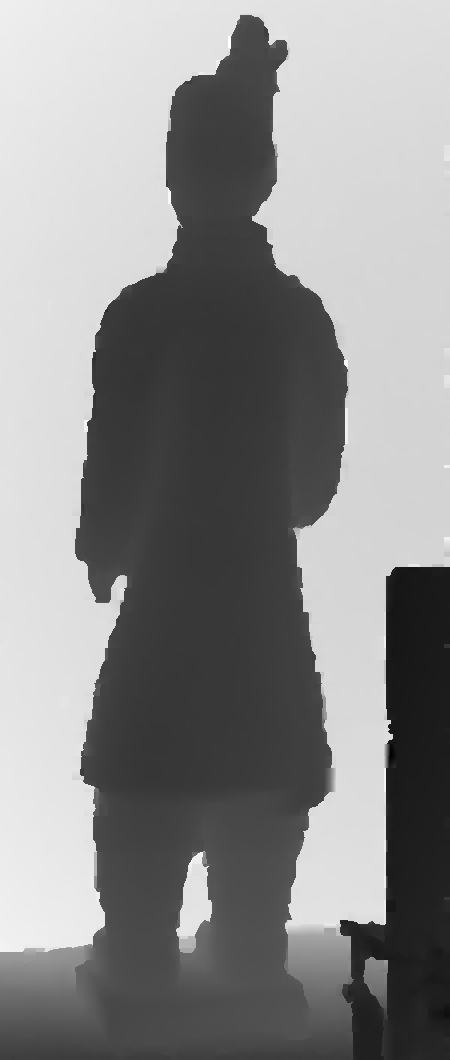

| Scene ID | Sensor Type | Preprocessing | Credits |
|---|---|---|---|
| 1 | PMD CamCube 2 | Bilateral Filter [5,3,0.1] | |
| 2 | Swiss Ranger 3000 ToF | Bilateral Filter [5,1.5,0.01] | [23] Schuon et al. - Lidarboost |
| 3 | Structured Light | | [21] Scharstein et al. - Middlebury Stereo Dataset |
| 4 | PMD CamCube 2 | Bilateral Filter [5,1.5,0.01] | |
| 5 | Kinect | Gaussian Blur [3 0.5] | |
| 6 | Canesta EP DevKit | Bilateral Filter [5,3,0.1] | [31] Q. Yang et al. |
| 7 | Canesta EP DevKit | Bilateral Filter [5,3,0.1] | [31] Q. Yang et al. |
| 8 | PMD CamCube 2 | Bilateral Filter [5,1.5,0.01] | |
| 9 | PMD CamCube 2 | Bilateral Filter [5,1.5,0.01] | |
| 10 | PMD CamCube 2 | Bilateral Filter [5,3,0.1] | |
| 11 | PMD CamCube 2 | Bilateral Filter [5,3,0.1] | |
| 12 | Laser Scanner | | |
| 13 | Structured Light | | [21] Scharstein et al. - Middlebury Stereo Dataset |
| 14 | Structured Light | | [21] Scharstein et al. - Middlebury Stereo Dataset |
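
Most scenes in the table are preprocessed with a bilateral filter. The page does not say what the three bracketed values mean, so the sketch below rests on an assumption: that they are the window half-width in pixels, the spatial standard deviation, and the range (depth) standard deviation, with depth values normalized to [0, 1]. The function name `bilateral_filter` and its parameter names are hypothetical, and this is a plain reference version for illustration, not the code used to produce the results.

```python
import math


def bilateral_filter(depth, half_width, sigma_spatial, sigma_range):
    """Edge-preserving smoothing of a 2D depth map given as a list of rows.

    Assumed parameter meaning (not stated in the source):
    half_width    -- window half-width in pixels
    sigma_spatial -- standard deviation of the spatial Gaussian
    sigma_range   -- standard deviation of the depth-difference Gaussian
    """
    rows, cols = len(depth), len(depth[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            center = depth[r][c]
            weighted_sum = 0.0
            weight_total = 0.0
            # Visit the square window around (r, c), clipped at the border.
            for rr in range(max(0, r - half_width), min(rows, r + half_width + 1)):
                for cc in range(max(0, c - half_width), min(cols, c + half_width + 1)):
                    # Penalize pixels that are far away in the image plane.
                    spatial = ((rr - r) ** 2 + (cc - c) ** 2) / (2.0 * sigma_spatial ** 2)
                    # Penalize pixels whose depth differs from the center depth.
                    rng = (depth[rr][cc] - center) ** 2 / (2.0 * sigma_range ** 2)
                    w = math.exp(-(spatial + rng))
                    weighted_sum += w * depth[rr][cc]
                    weight_total += w
            # The center pixel always contributes weight 1, so this is safe.
            out[r][c] = weighted_sum / weight_total
    return out


# Usage: a synthetic depth step, filtered with the values listed for scene 1.
step = [[0.0] * 4 + [1.0] * 4 for _ in range(6)]
smoothed = bilateral_filter(step, 5, 3.0, 0.1)
```

Under this reading, a small range value such as 0.1 or 0.01 keeps depth discontinuities sharp, because pixels on the far side of an edge receive almost no weight, while noise within a surface is still averaged out.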