Modeling Object Appearance Using Context-Conditioned Component AnalysisAbstractSubspace models have been very successful at modeling the appearance of structured image datasets when the visual objects have been aligned in the images (e.g., faces). Even with extensions that allow for global transformations or dense warps of the image, the set of visual objects whose appearance may be modeled by such methods is limited. They are unable to account for visual objects where occlusion leads to changing visibility of different object parts (without a strict layered structure) and where a one-to-one mapping between parts is not preserved. For example bunches of bananas contain different numbers of bananas but each individual banana shares an appearance subspace. In this work we remove the image space alignment limitations of existing subspace models by conditioning the models on a shape dependent context that allows for the complex, non-linear structure of the appearance of the visual object to be captured and shared. This allows us to exploit the advantages of subspace appearance models with non-rigid, deformable objects whilst also dealing with complex occlusions and varying numbers of parts. We demonstrate the effectiveness of our new model with examples of structured inpainting and appearance transfer.Update: A typo was found in equations 14 and 16: both equations should have "argmin" instead of "argmax". We apologize for confusion. The implementation doesn't have this error, so the experiments section is valid. Publications

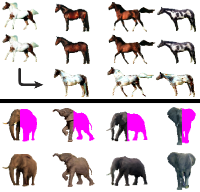

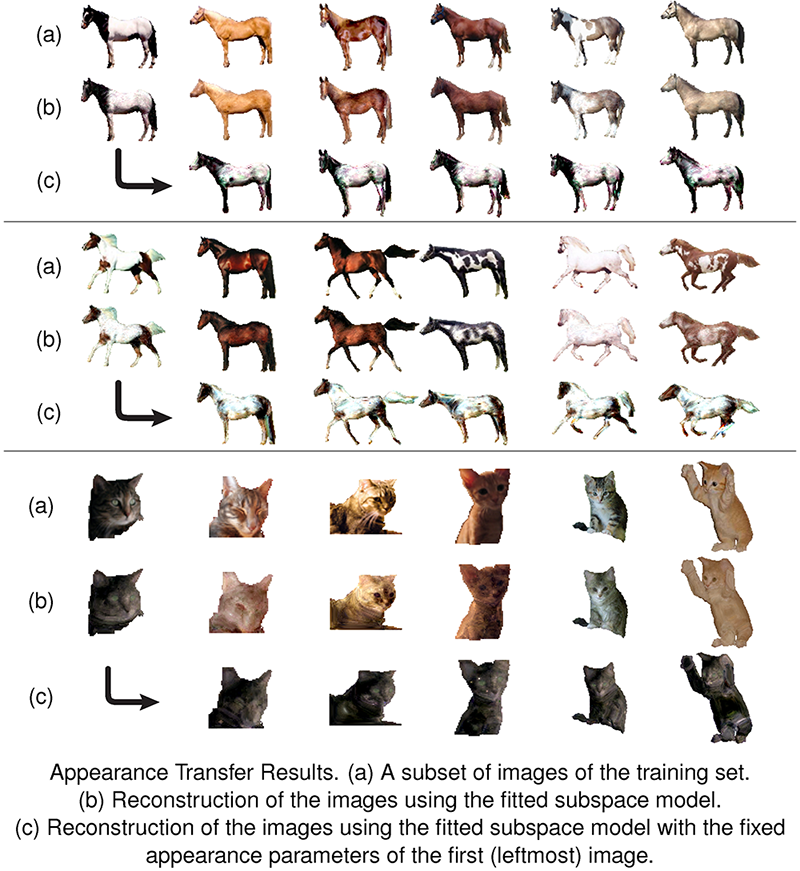

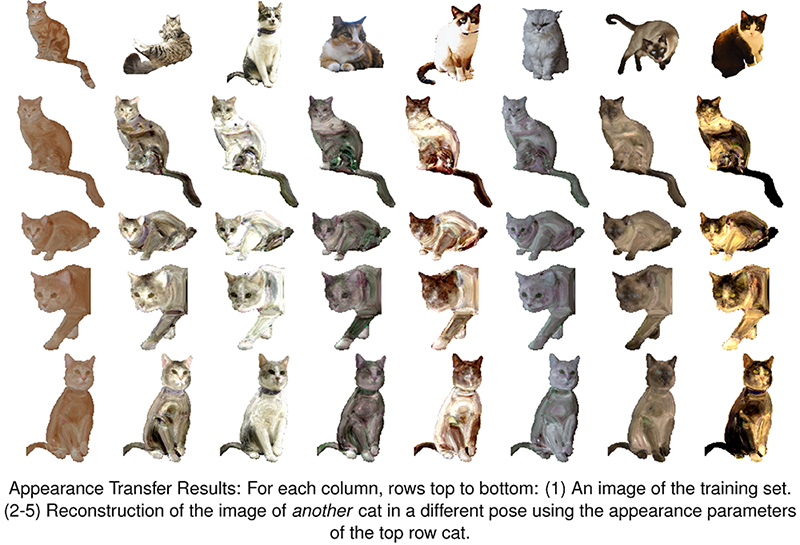

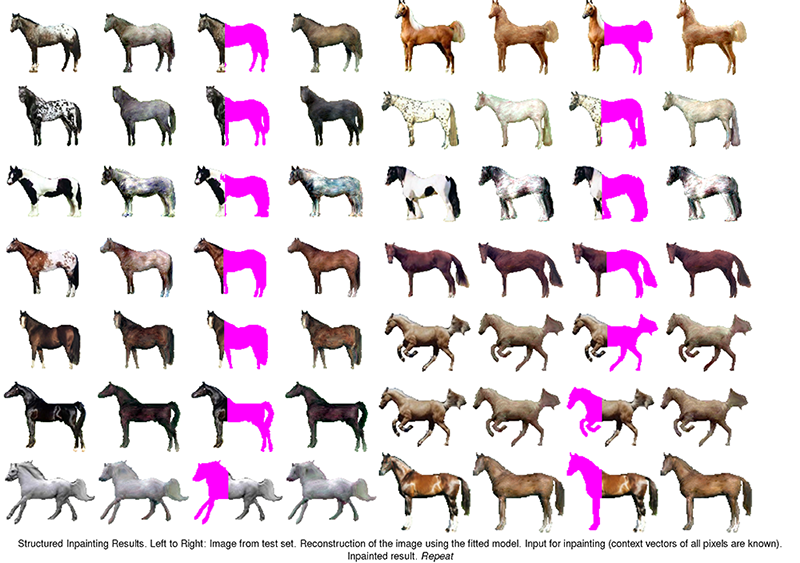

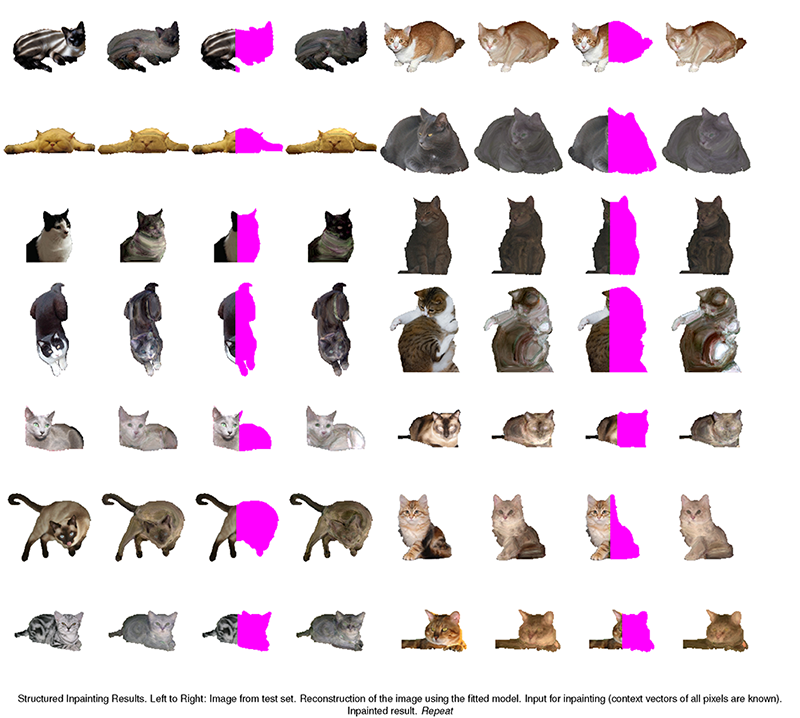

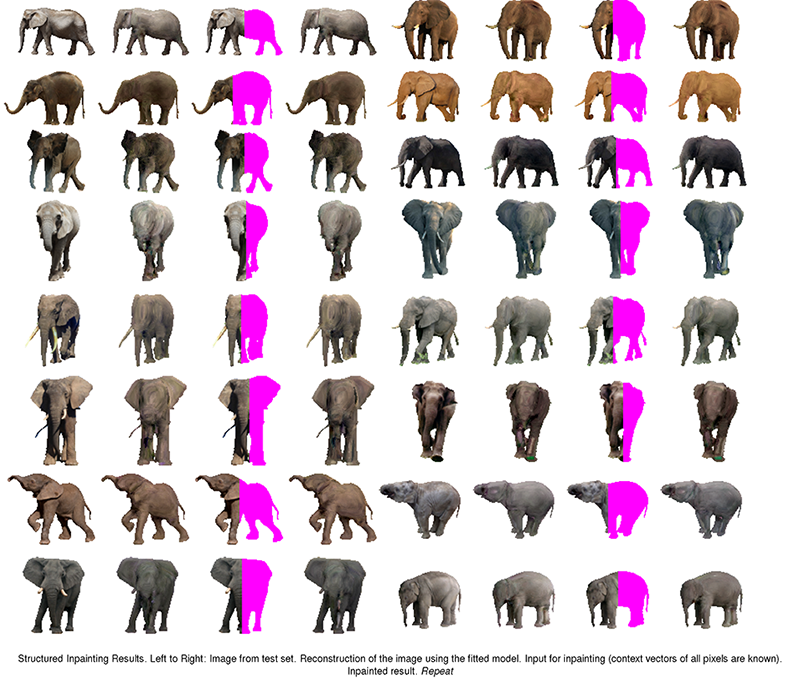

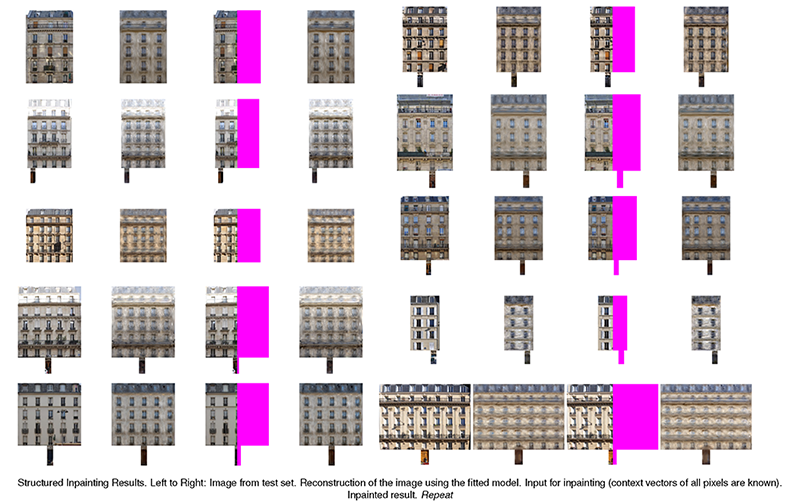

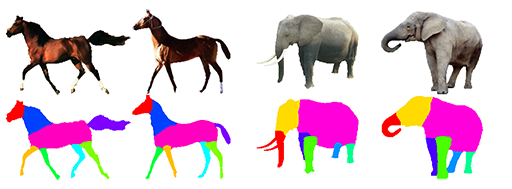

ResultsAppearance Transfer Results  Structured Inpainting Results    Dataset UCL Parts Dataset (285 Mb) CodePlease download from github repository.Contact: daniyar dot turmukhambetov dot 10 at ucl.ac.uk |