
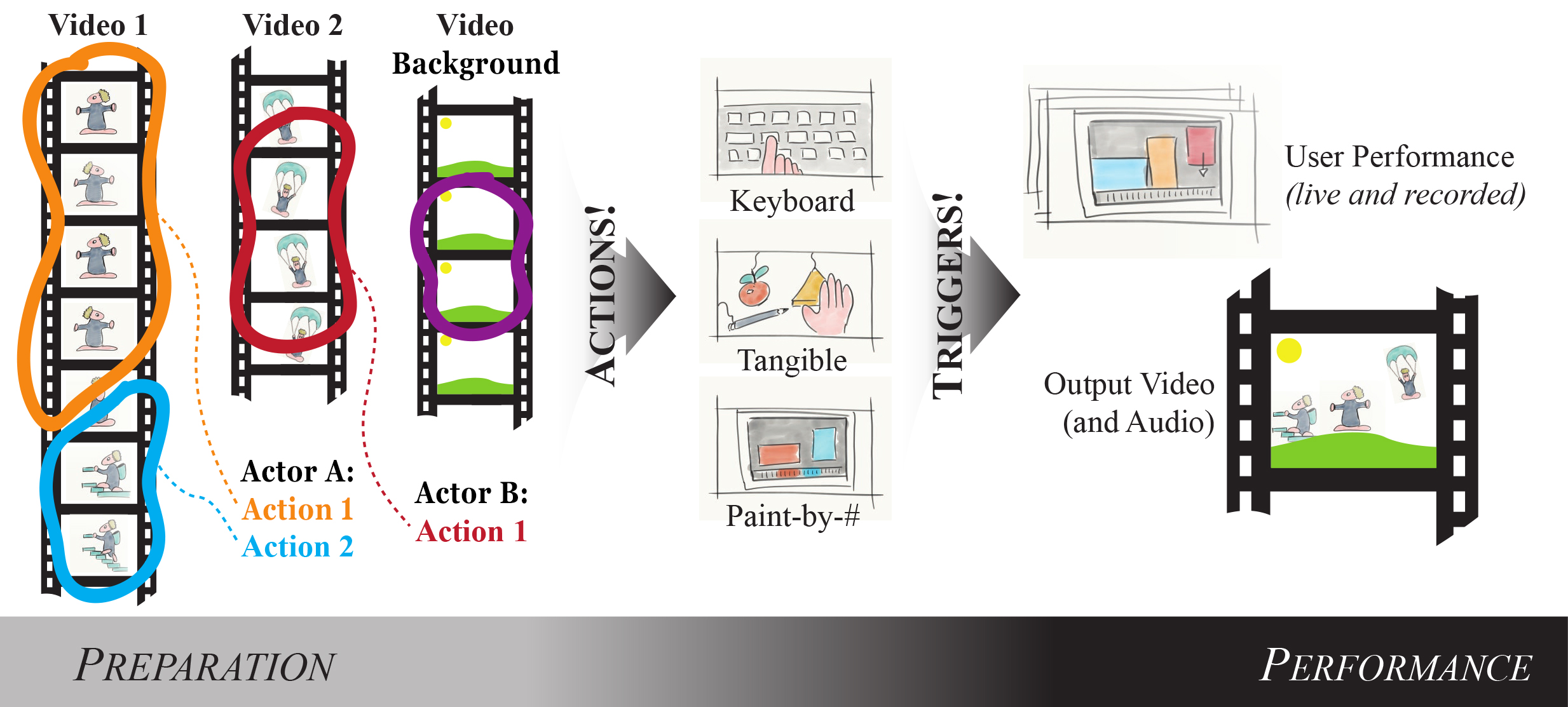

Our system allows users to prepare raw videos to make an interactive live performance. Our end-to-end technology assists with finding and segmenting loopable actions in video inputs (orange, blue, red). Then, discrete but compatible actions can easily be triggered during a show.

Abstract

We propose technology to enable a new medium of expression, where video elements can be looped, merged, and triggered, interactively. Like audio, video is easy to sample from the real world but hard to segment into clean reusable elements. Reusing a video clip means non-linear editing and compositing with novel footage. The new context dictates how carefully a clip must be prepared, so our end-to-end approach enables previewing and easy iteration.

We convert static-camera videos into loopable sequences, synthesizing them in response to simple end-user requests. This is hard because a) users want essentially semantic-level control over the synthesized video content, and b) automatic loop-finding is brittle and leaves users limited opportunity to work through problems. We propose a human-in-the-loop system where adding effort gives the user progressively more creative control. Artists help us evaluate how our trigger interfaces can be used for authoring of videos and video-performances.
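As a point of reference for why automatic loop-finding is brittle, the sketch below shows a generic video-textures-style baseline. It is an assumption for illustration, not the method used in our system. It scores every pair of frames by pixel distance and returns the pair that closes a loop most smoothly. The function `best_loop` and its `min_length` parameter are hypothetical names, and frames are assumed to be already decoded into a NumPy array from a static camera.

```python
import numpy as np


def best_loop(frames: np.ndarray, min_length: int = 10) -> tuple[int, int, float]:
    """Hypothetical baseline: return (start, end, cost) for the smoothest loop.

    frames: array of shape (T, H, W) or (T, H, W, C) from a static camera.
    The loop plays frames[start:end] and then jumps back to frames[start];
    the jump is least visible when frames[end] resembles frames[start].
    """
    t = frames.shape[0]
    flat = frames.reshape(t, -1).astype(np.float64)
    # Pairwise squared L2 distances via ||a||^2 + ||b||^2 - 2 a.b,
    # which avoids materialising a (T, T, pixels) difference array.
    sq = np.sum(flat ** 2, axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * (flat @ flat.T)
    dist = np.maximum(dist, 0.0)  # clip tiny negatives caused by rounding

    best = (0, 0, float("inf"))
    for start in range(t):
        # Only consider loops at least min_length frames long.
        for end in range(start + min_length, t):
            if dist[start, end] < best[2]:
                best = (start, end, float(dist[start, end]))
    return best


# Usage: a synthetic clip that repeats exactly every 12 frames.
rng = np.random.default_rng(0)
base = rng.random((12, 8, 8))
frames = np.stack([base[i % 12] for i in range(40)])
start, end, cost = best_loop(frames, min_length=10)
print(start, end, cost)
```

A purely pixel-based score like this has no notion of which action a loop contains, and it degrades quickly with noise, lighting changes, or several independent motions in one shot. That illustrates the gap between such a baseline and the semantic-level control users ask for.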

Errata

The original accepted paper incorrectly cited [Henriques et al. 2015] as the tracker used within our system. The tracker we bundle with the system is the CMT tracker [Nebehay and Pflugfelder 2015] as reflected in the corrected copy downloadable from this page and on arXiv.

BibTeX

@inproceedings{Ilisescu:2017:RAV:3025453.3025880,
  author = {Ilisescu, Corneliu and Kanaci, Halil Aytac and Romagnoli, Matteo and Campbell, Neill D. F. and Brostow, Gabriel J.},
  title = {Responsive Action-based Video Synthesis},
  booktitle = {Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems},
  series = {CHI '17},
  year = {2017},
  isbn = {978-1-4503-4655-9},
  location = {Denver, Colorado, USA},
  pages = {6569--6580},
  numpages = {12},
  url = {http://doi.acm.org/10.1145/3025453.3025880},
  doi = {10.1145/3025453.3025880},
  acmid = {3025880},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {cinemagraphs, interactive machine learning, sprites, video editing, video textures},
}

Acknowledgements

We would like to thank Mike Terry for his invaluable feedback. Thanks to all the volunteers and artists for their availability during our validation phase, especially Tobias Noller, Evan Raskob and Ralph Wiedemeier. The authors are grateful for the support of EU project CR-PLAY (no 611089) www.cr-play.eu and EPSRC grants EP/K023578/1, EP/K015664/1 and EP/M023281/1.

Paper PDF

Presentation

Code